The Fundamental Limits of Embedding-Based Retrieval: Why Single-Vector Embeddings Aren't Enough

Vector embeddings have become the backbone of modern retrieval systems, powering everything from search engines to Retrieval-Augmented Generation (RAG) systems. But what if I told you that these systems have fundamental mathematical limitations that no amount of training data or model scaling can overcome, as long as the embedding dimension stays fixed?

A groundbreaking paper from Google DeepMind, “On the Theoretical Limitations of Embedding-Based Retrieval”, challenges the widespread assumption that embedding models can handle all retrieval tasks effectively. Let’s dive into what this means for the future of AI systems.

The Core Problem

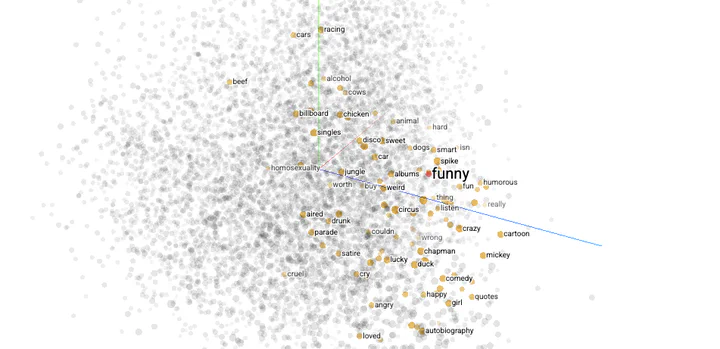

The research reveals a striking mathematical result: for any given embedding dimension d, once the corpus is large enough, there exist combinations of documents that no query can return as its top results. This isn't a training problem or a data-quality issue. It's a fundamental constraint of representing each query and each document as a single d-dimensional vector.

Think of it this way: if you’re trying to map the infinite complexity of human language and knowledge into a fixed-dimensional vector space, you’re bound to lose information. The paper makes this intuition precise by showing that the number of distinct top-k result sets a d-dimensional embedding can produce is limited.
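The claim is easiest to see in two dimensions. The sketch below is our own toy illustration, not code from the paper. It assumes dot-product scoring and fixed document vectors, and uses a hypothetical helper, `reachable_topk_sets_2d`, that exactly enumerates every top-k set any 2-D query can return. The ranking of two documents only flips when the query is orthogonal to their difference, so probing one direction between each pair of consecutive "critical" angles visits every possible ranking.

```python
import itertools
import math

import numpy as np


def reachable_topk_sets_2d(docs: np.ndarray, k: int) -> set:
    """Return every top-k document set that some nonzero query q in R^2 can
    produce under dot-product scoring (ignoring queries sitting exactly on a tie).

    Docs i and j swap order only when q is orthogonal to d_i - d_j, so the full
    ranking is constant between consecutive critical angles; one probe per
    interval therefore visits every achievable ranking.
    """
    critical = []
    for i, j in itertools.combinations(range(len(docs)), 2):
        vx, vy = docs[i] - docs[j]
        if vx == 0 and vy == 0:
            continue  # identical vectors always tie and never swap
        base = math.atan2(vy, vx)
        critical += [base + math.pi / 2, base - math.pi / 2]
    if not critical:
        critical = [0.0]
    crit = np.sort(np.mod(critical, 2 * math.pi))
    nxt = np.append(crit[1:], crit[0] + 2 * math.pi)  # wrap around the circle
    keep = (nxt - crit) > 1e-9  # skip zero-width gaps from duplicate angles
    probes = (crit[keep] + nxt[keep]) / 2

    found = set()
    for t in probes:
        q = np.array([math.cos(t), math.sin(t)])
        top = np.argsort(-(docs @ q))[:k]
        found.add(frozenset(int(i) for i in top))
    return found


# Six unit-length document vectors spread evenly around a circle (d = 2).
n = 6
phi = 2 * np.pi * np.arange(n) / n
docs = np.stack([np.cos(phi), np.sin(phi)], axis=1)

reachable = reachable_topk_sets_2d(docs, k=2)
print(f"{len(reachable)} of {math.comb(n, 2)} possible top-2 sets are reachable")
# -> 6 of 15 possible top-2 sets are reachable
```

Every query direction lands between two neighboring documents, so only the six adjacent pairs can ever be returned. A pair like {0, 3} is unreachable no matter how the query is trained. Moving the documents changes which pairs are reachable. The paper's point is that at a fixed dimension, no placement covers every combination once the number of documents is large enough.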

Key Research Findings

Theoretical Limitations

The authors draw on known results from learning theory to show that the number of top-k document subsets that can be returned by some query is limited by the embedding dimension. Because the limit comes from the geometry of the vector space itself, it persists even with perfect training and unlimited data.
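A one-dimensional toy case shows the kind of gap at stake. This is our own illustration, not the paper's general proof. Assume each document i is embedded as a distinct scalar x_i, the query as a nonzero scalar q, and scores are dot products:

```latex
\begin{aligned}
&s_i(q) = q\,x_i, \qquad q \neq 0,\ x_i \in \mathbb{R} \text{ distinct} \\
&q > 0 \;\Rightarrow\; \text{top-}k = \text{the } k \text{ documents with the largest } x_i \\
&q < 0 \;\Rightarrow\; \text{top-}k = \text{the } k \text{ documents with the smallest } x_i \\
&\#\{\text{returnable top-}k \text{ sets}\} \le 2 \;<\; \binom{n}{k} \qquad \text{for } n \ge 3,\ 1 \le k < n
\end{aligned}
```

However the x_i are trained, only the two ends of the line can ever be returned. For n = 4 and k = 2, that is at most 2 of the 6 possible pairs. Raising d loosens this limit, but the paper's result is that for any fixed d it still bites once the corpus is large enough.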

Empirical Evidence

To test this empirically, the researchers built the LIMIT dataset, a stress test designed to expose these theoretical limitations:

- 1000 queries

- 50,000 documents

- Only 46 documents that are relevant to at least one query (the rest are distractors)
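The number 46 would not be arbitrary if each LIMIT query has exactly two relevant documents, which we assume here. In that case, 46 is the smallest pool whose pairs can give all 1,000 queries distinct relevant sets. The sketch checks that arithmetic. The `qrels` assignment is a hypothetical illustration, not the dataset's actual construction.

```python
import math
from itertools import combinations

NUM_QUERIES = 1000  # from the LIMIT description above
POOL_SIZE = 46      # documents relevant to at least one query
K = 2               # assumption: every query has exactly two relevant documents

# Smallest pool whose K-subsets can give every query a distinct relevant set.
smallest_pool = next(n for n in range(K, 1000) if math.comb(n, K) >= NUM_QUERIES)
print(smallest_pool, math.comb(smallest_pool, K))  # -> 46 1035

# Hypothetical qrels: assign the first 1000 distinct pairs to the 1000 queries.
qrels = list(combinations(range(POOL_SIZE), K))[:NUM_QUERIES]
print(len(qrels), len(set(qrels)))  # -> 1000 1000
```

With 45 documents there would be only 990 pairs, so some queries would have to share a relevant set.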

The striking result? State-of-the-art embedding models fail consistently on this deliberately simple task. The authors also show that even embeddings optimized directly on the test set run into a ceiling set by the embedding dimension.
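The idea behind optimizing embeddings specifically for the test set is to treat every query vector and document vector as a free parameter. No encoder is involved, so neither the encoder nor its training data can be blamed for the result. Below is a minimal NumPy sketch of that idea under our own assumptions: plain gradient descent, a softmax cross-entropy loss over all documents, toy sizes, and a hypothetical helper name. It is not the paper's experimental code.

```python
from itertools import combinations

import numpy as np


def free_embedding_fit(n_docs: int, k: int, dim: int, steps: int = 3000,
                       lr: float = 0.2, seed: int = 0) -> float:
    """Hypothetical helper: treat every query and document vector as a free
    parameter, fit one query per k-subset of documents, and return the fraction
    of queries whose dot-product top-k equals their target subset."""
    rng = np.random.default_rng(seed)
    subsets = list(combinations(range(n_docs), k))
    m = len(subsets)
    target = np.zeros((m, n_docs))
    for row, subset in enumerate(subsets):
        target[row, list(subset)] = 1.0 / k  # spread target mass over relevant docs

    Q = rng.normal(scale=0.5, size=(m, dim))       # free query vectors
    D = rng.normal(scale=0.5, size=(n_docs, dim))  # free document vectors
    for _ in range(steps):
        S = Q @ D.T                           # (m, n_docs) dot-product scores
        S = S - S.max(axis=1, keepdims=True)  # shift for a numerically stable softmax
        P = np.exp(S)
        P /= P.sum(axis=1, keepdims=True)
        G = (P - target) / m                  # d(mean cross-entropy) / dS
        grad_Q, grad_D = G @ D, G.T @ Q       # chain rule through S = Q D^T
        Q -= lr * grad_Q
        D -= lr * grad_D

    top = np.argsort(-(Q @ D.T), axis=1)[:, :k]
    hits = [set(top[r].tolist()) == set(s) for r, s in enumerate(subsets)]
    return float(np.mean(hits))


for dim in (1, 2, 6):
    acc = free_embedding_fit(n_docs=6, k=2, dim=dim)
    print(f"dim={dim}: {acc:.2f} of the 15 top-2 combinations recovered")
```

By the one-dimensional argument, the `dim=1` run can recover at most 2 of the 15 combinations whatever the optimizer does. `dim=6` has enough room in principle, with one axis per document, to fit all of them. How close gradient descent actually gets depends on the step count and learning rate.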

Concrete Examples of Embedding Failures

1. Multi-Aspect Retrieval

Vector embeddings, as they exist now, struggle when documents need to be retrieved based on multiple, potentially conflicting criteria.

Example: Finding documents that are simultaneously:

- Technical AND beginner-friendly

- Historical AND relevant to current events

- Comprehensive AND concise

An embedding model built on the single-vector paradigm can’t capture these nuanced trade-offs effectively.

2. Context-Dependent Relevance

The same document may be relevant or irrelevant depending on subtle query context.

Example:

- Query: “Python programming”

- A document about snake biology might be irrelevant

- But if the context is “animal names in programming languages,” it becomes highly relevant

3. Boolean Operations

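When you need to retrieve documents based on logical combinations (AND, OR, NOT), current vector embeddings break down. Every document’s relevance must be expressed as a single similarity score against a single query vector, which leaves little room to encode exclusions or disjunctions.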

Example: “Find documents about machine learning BUT NOT deep learning AND related to healthcare”
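A small worked case shows how a logical combination can be out of reach. For illustration only, assume a fixed 2-D embedding where each document’s coordinates mark whether it covers topic A and topic B. The query “about A or B, but not both” should then rank the two single-topic documents above the others:

```latex
\begin{aligned}
&d_A = (1,0),\; d_B = (0,1),\; d_{AB} = (1,1),\; d_{\varnothing} = (0,0),\qquad s(q,d) = q^\top d \\
&s(q,d_B) > s(q,d_{\varnothing}) \;\Rightarrow\; q_2 > 0 \\
&s(q,d_A) > s(q,d_{AB}) \;\Rightarrow\; q_1 > q_1 + q_2 \;\Rightarrow\; q_2 < 0
\end{aligned}
```

No query vector satisfies both conditions, so this top-2 set is unreachable for this embedding. Adding a third coordinate that flags “both topics” would make it reachable: with d_{AB} = (1,1,1), the query q = (1,1,-3) works. That is the trade-off the paper formalizes: supporting more combinations requires more dimensions.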

4. Dimensional Constraint Problem

If you have a 768-dimensional embedding (like BERT-base), then once the corpus is large enough there are provably document subsets that no query vector can return as its top results, regardless of training quality.

Why This Matters for Real-World Applications

This research has profound implications for:

- RAG Systems: Current RAG implementations may miss crucial information due to these fundamental limitations

- Search Engines: Semantic search systems built on embeddings have inherent blind spots

- Recommendation Systems: Single-vector embedding approaches may fail to capture complex user preferences

- Enterprise Knowledge Management: Organizations relying on embedding-based document retrieval may have systematic gaps

What This Means for AI Development

This research represents a crucial shift in how we think about retrieval systems. Instead of asking “How can we make better embeddings?”, we should be asking “What comes after single-vector embeddings?”

As AI systems become more complex—handling reasoning, following complex instructions, and managing vast knowledge bases—understanding these fundamental limits becomes critical. The solution isn’t just bigger models or more data; it’s rethinking our approach to information retrieval entirely.

Conclusion

The era of believing that single-vector embeddings can solve all retrieval problems is coming to an end. This paper provides the mathematical proof of what many practitioners have intuited: there are fundamental limits to what single vectors can represent.

For researchers and engineers building AI systems, this work is a wake-up call. It’s time to explore new paradigms that can overcome these theoretical constraints and build more robust, capable retrieval systems.

The future of information retrieval lies not in perfecting single-vector embeddings, but in moving beyond them entirely.

Paper: On the Theoretical Limitations of Embedding-Based Retrieval

Code: LIMIT Dataset Repository

Authors: Google DeepMind Research Team

Want to dive deeper? Check out the LIMIT dataset and run your own experiments to see these limitations in action.